How Does TView's Optimization Work?

TView's optimization method recognizes that the differences between plan results typically look more like rolling meadows than like sharp mountain peaks. Methods that rely on finding sharp peaks (e.g., "hill climbing") can fail to find their way to the highest point in a gentler landscape.

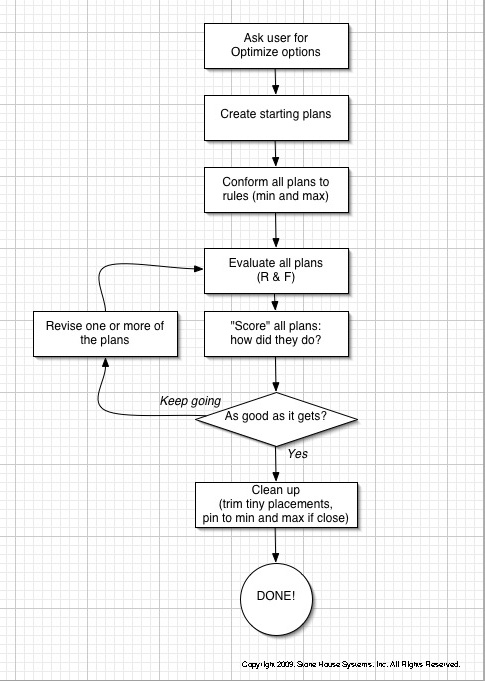

To search such a landscape, TView uses a "cloud" of plan possibilities. Most commonly, we start by creating a set of plans, each of which is heavy in one daypart or line entry and light in the others. We also usually make sure that the current plan is one of the starting points. So the "cloud" is defined by a set of points, perhaps 10 or more.

When all the plans in this "cloud" are evaluated, some are clearly winners, which gives us a direction in which to start searching. Plans that were less successful are moved slightly in the direction of the winning plan. This also mixes elements within each plan, so the plans begin to become more practical.

Thus, as we proceed in the optimization, the cloud changes shape and moves. It can do any of the following:

- The cloud can move in the direction of promising results: Plans are revised to use elements that seem to improve results.
- The cloud can expand: If big differences remain between the results of candidate plans but there is no preferred direction, the cloud can expand into new possibilities and mixes.
- The cloud can contract: When we no longer see any obvious direction for improvement, the cloud begins to shrink. Eventually, it becomes small enough that the differences between plans are negligible, and thus we have found a recommendation.
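The cloud behavior described above can be illustrated with a small sketch. This is not TView's actual code: the function names (`normalize`, `starting_cloud`, `optimize`, `toy_score`), the `pull` and `jitter` parameters, and the toy scoring surface are all assumptions made for illustration. Each plan is a list of budget shares that sums to 1. Less successful plans are pulled toward the current winner, a random nudge scaled to the cloud's size lets the cloud explore new mixes, and the loop stops once the plans score almost identically.

```python
import random

def normalize(plan):
    """Clip negative shares and rescale so the shares sum to 1 (fixed budget)."""
    clipped = [max(share, 0.0) for share in plan]
    total = sum(clipped)
    if total == 0.0:
        return [1.0 / len(plan)] * len(plan)
    return [share / total for share in clipped]

def starting_cloud(current_plan, heavy=0.8):
    """One plan heavy in each entry and light in the others, plus the current plan."""
    n = len(current_plan)
    light = (1.0 - heavy) / (n - 1)
    cloud = [[heavy if i == j else light for j in range(n)] for i in range(n)]
    cloud.append(normalize(current_plan))
    return cloud

def optimize(cloud, score, pull=0.5, jitter=0.25, rounds=200, tol=1e-9, seed=0):
    """Hypothetical cloud search: pull losers toward the winner until scores agree."""
    rng = random.Random(seed)
    cloud = [list(plan) for plan in cloud]
    for _ in range(rounds):
        cloud.sort(key=score, reverse=True)
        best = cloud[0]
        # Contracted cloud: differences between plans are negligible.
        if score(best) - score(cloud[-1]) < tol:
            break
        # Cloud size: largest per-entry distance between any plan and the winner.
        size = max(abs(a - b) for plan in cloud[1:] for a, b in zip(plan, best))
        # Keep the winner; move every other plan toward it, with a nudge
        # proportional to the cloud size so new mixes are still explored.
        cloud = [best] + [
            normalize([p + pull * (b - p) + rng.uniform(-jitter, jitter) * size
                       for p, b in zip(plan, best)])
            for plan in cloud[1:]
        ]
    return max(cloud, key=score)

def toy_score(plan, target=(0.5, 0.3, 0.2)):
    """Assumed smooth 'rolling meadow' surface with its top at `target`."""
    return -sum((p - t) ** 2 for p, t in zip(plan, target))

start = starting_cloud([0.2, 0.2, 0.6])
result = optimize(start, toy_score)
```

Because the winner is always kept, the recommendation can never score worse than the best starting plan. A real scoring function would evaluate reach or effective reach rather than distance to a known target.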

Here is an overview of TView's optimization process:

This general idea is similar to most optimization strategies. The key difference is that TView works with multiple plans (a cloud) all at once.

The problem with "hill climbing" and other typical strategies that work by refining a single plan is that they are prone to climbing the wrong hill: a so-called local maximum, an area whose results are better than its immediate surroundings but which is not the much taller hill nearby.
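A toy example makes the local-maximum problem concrete. The one-dimensional `landscape` below is invented for illustration (a low hill near 2 and a taller hill near 8), and `hill_climb` is a generic textbook hill climber, not anything taken from TView. A single climber that starts near the low hill stops there, while several starting points spread across the range find the taller hill.

```python
def landscape(x):
    """Hypothetical results curve: low hill peaking at x=2, taller hill at x=8."""
    return max(3 - (x - 2) ** 2, 0) + max(10 - (x - 8) ** 2, 0)

def hill_climb(x, step=0.5):
    """Refine a single point: step to the better neighbor until neither improves."""
    while True:
        best_neighbor = max((x - step, x + step), key=landscape)
        if landscape(best_neighbor) <= landscape(x):
            return x
        x = best_neighbor

# One starting point near the low hill gets stuck on it.
single = hill_climb(1.0)

# Several starting points spread across the range: at least one of them
# begins on the slope of the taller hill.
starts = [1.0, 3.0, 5.0, 7.0, 9.0]
multi = max((hill_climb(s) for s in starts), key=landscape)

print(single, landscape(single))  # 2.0 3.0
print(multi, landscape(multi))    # 8.0 10.0
```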

That's the overall idea, which TView enhances in several ways:

- The scoring of plans (in the middle box in the chart above) changes with the requested goal. For example, when you choose to optimize based on effective reach (ER), the scoring reflects that, and it drives an entirely different search. Improving reach is usually a matter of seeking out ways to add new, unreached viewers, while improving ER is often the exact opposite: reducing weight in entries and combinations of entries that produce only lightly exposed viewers.
- The scoring of plans (in the middle box in the chart above) is not limited to a single value, such as reach. When you ask for reach, TView's optimizer also keeps an eye on ER, and vice versa.
- Scoring reflects your "faves", those items you've marked with qualitative "++" to "--" tags.
- TView also controls the development of plans according to your rules and goals. When the cloud bumps into an area that produces plan entries that fail your specified minimums and maximums for an entry, TView will back away from that direction.
- An important but often overlooked consideration is the effect of plan revision on GRPs. Gaining two reach points but losing 33% of GRPs is usually not thought of as a good thing, and TView knows that. This consideration is under user control with a slide switch in TView's optimize dialog. (Read about that here.)
- The optimization can be "run in reverse" to find a plan that meets communication goals at the lowest possible budget.
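To show how several of these considerations could be folded into one score, here is a minimal sketch. TView's real scoring formula is not documented here, so everything in the block is an assumption: the `PlanResult` fields, the `secondary_weight` and `grp_sensitivity` parameters (the latter standing in for the slide switch), and the way the GRP loss is penalized. The example reuses the case from the list above, a revision that gains two reach points but loses 33% of GRPs.

```python
from dataclasses import dataclass

@dataclass
class PlanResult:
    reach: float            # reach, in points
    effective_reach: float  # ER, in points
    grps: float             # gross rating points delivered

def within_limits(shares, minimums, maximums):
    """Rule check: every entry must respect its minimum and maximum."""
    return all(lo <= s <= hi for s, lo, hi in zip(shares, minimums, maximums))

def score(result, baseline_grps, goal="reach",
          secondary_weight=0.25, grp_sensitivity=0.5):
    """Hypothetical blended score: primary goal, a lighter secondary goal,
    and a penalty for GRPs lost relative to the starting plan."""
    if goal == "reach":
        primary, secondary = result.reach, result.effective_reach
    else:
        primary, secondary = result.effective_reach, result.reach
    # Fraction of GRPs lost (0 when GRPs hold steady or grow).
    grp_loss = max(0.0, (baseline_grps - result.grps) / baseline_grps)
    return primary + secondary_weight * secondary - grp_sensitivity * 100 * grp_loss

baseline = PlanResult(reach=60.0, effective_reach=30.0, grps=300.0)
revised = PlanResult(reach=62.0, effective_reach=30.0, grps=201.0)  # +2 reach, -33% GRPs

# With the GRP penalty on, the revision scores worse than the baseline.
penalized = score(revised, 300.0) < score(baseline, 300.0)
# With the penalty switched off, the two extra reach points win.
unpenalized = score(revised, 300.0, grp_sensitivity=0.0) > score(baseline, 300.0, grp_sensitivity=0.0)
```

In a cloud search, a candidate plan that fails `within_limits` would simply be rejected or pulled back, which is one way to model backing away from a direction that breaks your rules.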

Finally, we should mention another approach. Back around 2002 there was a flurry of interest in program-level optimization. To learn about that, continue on to the next topic, Find the Best Mix of Programs?